Work in tech and you’ll hear a lot about Artificial General Intelligence (AGI) these days. You know the story: our rapid advancements in Artificial Intelligence will soon produce a computer whose thinking is on par with the human brain. The tantalizing leap in logic is that general intelligence means human intelligence, which means we’re all replaceable, or worse, under existential threat. And the presumption is that the source of our unseating is the Large Language Model (LLM). But surely we are more than the sum of our words?

Until about a week ago, I felt like I had a sufficient armchair enthusiast’s understanding of the basic functions of the brain. But then a handful of disparate articles found their way through my content filters and challenged some of my core assumptions, several of which have implications for our attempts to create Artificial Intelligence, including:

- “Neurons that fire together, wire together” or “learning in the brain happens through strengthening synaptic connections between neurons” (oversimplified)

- The greater the degree of integration of information (knowledge accessible by the whole brain rather than a discrete part), the higher the level of consciousness (unproven)

- Dopamine is the “reward” or “pleasure” chemical (backwards)

- The cerebellum’s function is to control balance/movement (it does way more than that!)

Not only did this research show me how rapidly we are advancing our understanding of the human brain, it also made me realize that, regardless of when we hit the hallowed AGI benchmark, there is still so much more we need to understand, let alone implement computationally, to replicate the abilities of the human brain.

The whole thing called to mind a passage by Malidoma Somé, a Dagara African writer and spiritualist, who critiqued Western society as being “possessed” by its reliance on language:

“The power of nature exists in its silence. Human words cannot encode the meaning because human language has access only to the shadow of meaning” (Of Water and Spirit)

Language is not knowledge. It’s an attempt to impart information to others. To rely on large language models to demonstrate “general intelligence” is to ignore the vast breadth of capabilities humans employ to navigate and make sense of the world. And the more we learn about the brain, the more we understand how complex these processes are.

To provide some context for the core assumptions I mentioned above:

Neurons that fire together, wire together

The brain is not like a neural network where the only thing that is “learned” or “updated” is the weights between neurons (“Neurons that fire together, wire together”). Learning also happens within individual neurons.

That’s bad news for anyone hoping to simulate a brain digitally. It means there’s a lot more relevant stuff to simulate (like the learning that goes on within cells). Source

According to the article above, though, that’s not a bad thing for those looking to understand how learning works in the brain. It implies that somewhere inside an individual neuron exists a physical object (or “engram”) that corresponds to a discrete piece of information, which would, in theory, make discrete knowledge more accessible.
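For contrast, the textbook Hebbian rule that the slogan describes can be sketched in a few lines of Python. This is a minimal illustration under assumed values (a single layer, rate-coded activity, an arbitrary learning rate, and a hypothetical `hebbian_update` function), not a model from the article. Notice that the weight matrix is the only state that changes, which is exactly the simplification the research above pushes back on.

```python
# A minimal sketch of a Hebbian ("fire together, wire together") update.
# Assumptions (illustrative only): rate-coded activity, one layer of weights,
# and an arbitrary learning rate. hebbian_update is a hypothetical helper.
# Only the weights change; nothing inside a "neuron" is learned.

def hebbian_update(weights, pre, post, learning_rate=0.1):
    """Return new weights with w[i][j] += learning_rate * post[i] * pre[j]."""
    return [
        [w_ij + learning_rate * post_i * pre_j for w_ij, pre_j in zip(row, pre)]
        for row, post_i in zip(weights, post)
    ]

# Two input neurons feeding one output neuron.
weights = [[0.0, 0.0]]
pre = [1.0, 0.0]   # input 0 fires, input 1 stays silent
post = [1.0]       # the output neuron fires at the same time as input 0

for _ in range(3):
    weights = hebbian_update(weights, pre, post)

# The co-active connection has grown; the silent one has not.
print(weights)
```

Plain Hebbian growth is unbounded, so practical variants such as Oja's rule add normalization; the sketch leaves that out for clarity.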

The greater the degree of integration of information, the higher the level of consciousness

The predominant theory of what constitutes consciousness, Integrated Information Theory (IIT), was recently and widely labeled as pseudoscience. This is a tough problem, because consciousness is so subjective! What is it like to be you or me? More importantly, why does it matter? Well, in the case of Artificial Intelligence, it might help answer the question “what would it take for a machine to become conscious?”

Opponents and proponents of IIT conducted an experiment in which participants were shown a series of pictures and symbols and asked to report when certain images appeared.

According to IIT, the task should prompt sustained synchronization of activity in the back of the brain (the posterior cortex). However, researchers observed only transient synchronization between areas in the posterior cortex, not the sustained synchronization that was hypothesized. Source

In other words, much more study is needed, and there are dozens of other theories of consciousness lying in wait.

Dopamine is the “reward” chemical

According to this article, dopamine does NOT generate pleasurable feelings. In fact, it is the other way around: pleasurable feelings generate dopamine. The desire for pleasurable feelings is encoded in the brain via dopamine.

Why does this matter?

The brain is a prediction machine, constantly trying to maximize expected gain. When our bets play out, dopamine signals whether the outcome was better or worse than expected. It helps the brain learn from surprising information.

This logic is not something currently built into the architecture of a large language model, though it is (subjectively) represented in the human data annotation that happens during model training. It is, however, why we can’t stop checking social media feeds: a system in which we’re forever trying to predict whether, when, and how we’ll get socially valuable rewards.
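The “better or worse than expected” idea is often modeled with a simple Rescorla-Wagner-style update, in which an expectation moves toward each outcome by a fraction of the surprise. The sketch below is an illustration under assumed values (one cue, a learning rate of 0.5, and a hypothetical `update_expectation` function), not the article’s model.

```python
# A minimal sketch of a reward prediction error, the quantity dopamine is
# thought to signal. Rescorla-Wagner-style update for a single cue; the
# learning rate and the reward sequence are illustrative assumptions.

def update_expectation(expected, reward, learning_rate=0.5):
    """Hypothetical helper: return (prediction_error, new_expected_value)."""
    prediction_error = reward - expected  # > 0 means better than expected
    return prediction_error, expected + learning_rate * prediction_error

expected = 0.0
history = []
# Three rewarded trials, then the expected reward is withheld.
for reward in [1.0, 1.0, 1.0, 0.0]:
    error, expected = update_expectation(expected, reward)
    history.append(error)

print(history)  # [1.0, 0.5, 0.25, -0.875]
```

The error starts large, shrinks as the reward becomes predictable, and turns negative when an expected reward fails to arrive, mirroring the “better or worse than expected” signal described above.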

The cerebellum’s function is to control balance/movement

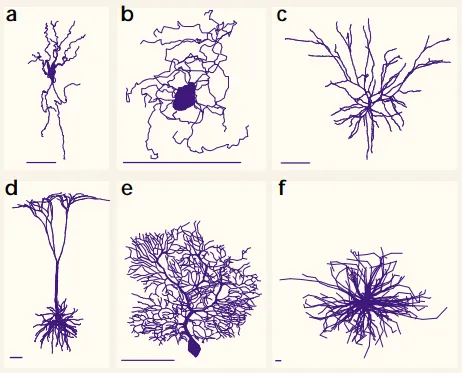

By studying patients with cerebellar disabilities, and isolating the functions of Purkinje cells (which exist only in the cerebellum and are capable of single-cell learning), researchers have determined that the cerebellum is where conditioned associations are learned. Interestingly, the cerebellum contains roughly 80% of the brain’s neurons, and its Purkinje cells (panel e in the figure) are vastly more complex than other neuron types.

To add a bit more clarity to the role of the cerebellum: fluent movement requires rapidly and unconsciously forming intentions to do multiple different things in sequence, without having to stop to think between steps. The cerebellum is involved in the “readiness” or “anticipation” prior to taking a mental action. Source

Again, we have nothing (as far as I’m aware) in our large language models that is built for preparedness. At best, LLMs respond to in-progress input with suggested word completions. Encoding a real-time anticipatory response into current systems would have immense computational implications! Not to mention, if abstracted to realms of artificial intelligence beyond language, such as computer vision or robotics, anticipation requires something faster than sensory processing. In the brain, that’s the “virtual reality” generated by the cerebellum.

Whereas in other regions of the brain there are lots of recurrent loops and cross-connected neurons, “The cerebellum is ‘one and done’ — information goes in, through the Purkinje cells, and out.”

The independence of cerebellar modules makes sense given the need for speed — you can’t have long chains of interconnected neurons messing around if you want to give near-real-time model responses to control immediate action. Source

Again though, could this knowledge help advance our approaches to artificial intelligence? In robotics, according to the author above, one lesson might be to pair a fast, feedforward-only predictive model that controls real-time actions with a slower pathway that retrains and updates the model.
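That suggestion can be sketched in Python. This is a hypothetical illustration, not the author’s design: a one-dimensional linear model stands in for the fast feedforward predictor, a toy function y = 2x stands in for the world, and plain batch gradient descent stands in for the slower retraining pathway. The names (`FeedforwardPredictor`, `slow_update`, `true_response`) and all settings are assumptions.

```python
# A minimal sketch of a "fast feedforward path + slow update path" split.
# Hypothetical throughout: a 1-D linear predictor, a toy environment whose
# true response is y = 2x, and arbitrary retraining frequency and step size.

class FeedforwardPredictor:
    def __init__(self):
        self.w = 0.0  # slope
        self.b = 0.0  # offset

    def predict(self, x):
        # Fast path: one multiply-add, no recurrence, no learning.
        return self.w * x + self.b

def slow_update(model, buffer, learning_rate=0.2, epochs=200):
    # Slow path: batch gradient descent on mean squared error over the
    # (input, outcome) pairs collected since the last retraining.
    n = len(buffer)
    for _ in range(epochs):
        grad_w = 2 / n * sum((model.predict(x) - y) * x for x, y in buffer)
        grad_b = 2 / n * sum(model.predict(x) - y for x, y in buffer)
        model.w -= learning_rate * grad_w
        model.b -= learning_rate * grad_b

def true_response(x):
    return 2.0 * x  # hypothetical environment the model must anticipate

model = FeedforwardPredictor()
buffer = []
for step in range(1, 101):
    x = (step % 10) / 10.0
    anticipated = model.predict(x)        # used immediately to act
    buffer.append((x, true_response(x)))  # actual outcome observed afterwards
    if step % 25 == 0:                    # retrain only occasionally
        slow_update(model, buffer)
        buffer.clear()

# After a few slow updates, w should approach 2.0 and b should approach 0.0.
print(model.w, model.b)
```

The design choice is the separation: the control loop only ever calls `predict`, a fixed-cost calculation, while learning happens in batches off the time-critical path.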

Regardless, it’s clear that there is more to simulating human intelligence than merely achieving AGI (a term commonly defined as “an autonomous system that surpasses human capabilities in the majority of economically valuable tasks.” Source). One thing is certain: the more we learn in both machine learning and neuroscience, the closer we get.